The following are highlights of recent research conducted by our students.

Mobile Application Development

Ocean Mapping

The following video demonstrates a mobile app version of the Mid-Atlantic Ocean Data Portal that will allow Android and iPhone users to search and view thousands of maps showing natural features and human activities at sea. The app was developed for CS-492 – Senior Project.

Artificial Intelligence, Robotics

NAO Robot

NAO is an autonomous, programmable robot with a wide range of capabilities, including human interaction, object sensing, and humanoid movement. Users can program NAO in the Python programming language and with the Choregraphe desktop application.

Real-Time Control

The following video shows final projects from SE-641 (Real-Time Robot Control) that use NAO for real-time robotic control. The projects demonstrated are: 1) a robot calculator, 2) grabbing and carrying objects, and 3) playing rock-paper-scissors.

Dialogue and Face Recognition

In the following project, students programmed NAO to demonstrate several ways in which the robot can converse with humans and adapt its conversation to a face it recognizes. This project provides a strong foundation on which students can build to explore more complex problems with NAO.

Deep Learning-Based Hand Gesture Recognition and Drone Flight Controls

In this master’s thesis project, a hand gesture recognition system was designed and developed to control the flight of unmanned aerial vehicles (UAVs). To train the system to recognize the designed gestures, skeleton data collected from a Leap Motion Controller are converted into two different data models. A training dataset of 9,124 samples and a testing dataset of 1,938 samples were created to train and test three proposed deep neural networks: a 2-layer fully connected neural network, a 5-layer fully connected neural network, and an 8-layer convolutional neural network. In static testing, the 2-layer fully connected network achieves an average accuracy of 98.2% on normalized datasets and 11% on raw datasets; the 5-layer fully connected network achieves 95.2% on normalized datasets and 45% on raw datasets; and the 8-layer convolutional network achieves 96.2% on both normalized and raw datasets. Testing on a drone-kit simulator and a real drone shows that this system is feasible for drone flight control.
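One plausible reason for the large gap between normalized and raw results (98.2% versus 11% for the 2-layer network) is that raw skeleton coordinates change with where the hand is held and how large it is. The sketch below shows one common normalization, assuming each sample is a palm position plus a list of 3-D joint positions. The thesis's two data models are not described here, so the function name `normalize_hand` and this scheme are illustrative assumptions, not the project's actual preprocessing.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def normalize_hand(palm: Point, joints: List[Point]) -> List[float]:
    """Return a flat feature vector that ignores where the hand is and how big it is.
    Hypothetical preprocessing step, not the thesis's documented method."""
    # Translate: express each joint relative to the palm center.
    rel = [(x - palm[0], y - palm[1], z - palm[2]) for (x, y, z) in joints]
    # Scale: divide by the largest palm-to-joint distance.
    scale = max(math.sqrt(x * x + y * y + z * z) for (x, y, z) in rel)
    if scale == 0:
        raise ValueError("every joint coincides with the palm")
    # Flatten [(x, y, z), ...] into [x, y, z, x, y, z, ...] for a fully connected network.
    return [c / scale for joint in rel for c in joint]


# The same hand shape, held in two places at two sizes, gives identical features.
small = normalize_hand((0, 0, 0), [(0, 60, 0), (30, 0, 0)])
large = normalize_hand((100, 200, 50), [(100, 320, 50), (160, 200, 50)])
print(small == large)  # True
```

Because the output depends only on the hand's shape, a network trained on it does not have to learn position and size invariance from the data itself.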

Motion Control of Robots Using Speech

This is a graduate class project. Students were asked to design and develop a real-time application in the Linux environment to control the motion of two robots. The motion of the robots is controlled purely by speech, so a speech recognition system was also developed to produce the control commands.
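Between the speech recognizer and the motion controllers, something has to turn a recognized utterance into a command for a specific robot. A minimal sketch of that step is below. The robot names, the action words, and the `Command` type are hypothetical, since the project's actual vocabulary is not specified.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical vocabulary: the project's actual command words are not specified.
ROBOTS = {"one": 1, "two": 2}
ACTIONS = {"forward", "backward", "left", "right", "stop"}


@dataclass(frozen=True)
class Command:
    robot: int   # which of the two robots to move
    action: str  # motion primitive to execute


def parse_command(transcript: str) -> Optional[Command]:
    """Map a recognized utterance to a Command, or None if it is incomplete."""
    words = transcript.lower().split()
    robot = next((ROBOTS[w] for w in words if w in ROBOTS), None)
    action = next((w for w in words if w in ACTIONS), None)
    if robot is None or action is None:
        # Reject rather than guess: a wrong motion is worse than no motion.
        return None
    return Command(robot, action)


print(parse_command("Robot two turn LEFT"))  # Command(robot=2, action='left')
print(parse_command("move forward"))         # None (no robot named)
```

Returning `None` for incomplete input keeps recognition errors from becoming unintended motion, which matters in a real-time loop where there is no time to ask the speaker to confirm.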

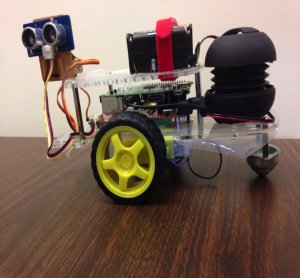

A Robot to Improve Verbal Communication Skills of Children with Autism

(In collaboration with Dr. Patrizia Bonaventura of the Speech Pathology Department)

Children diagnosed with autism typically have difficulty with social interaction and verbal communication. Various studies suggest that children with autism respond better to robots than to humans. One reason may be their special interest in electronic and mechanical devices. We have been experimenting with various types of robots. In this project, we are seeking answers to the following questions: Can an interactive robot help children with autism respond in interpersonal communication? Does the children’s interaction with a human differ from their interaction with a robot? How does the form of the robot affect the children’s interaction with it?

Data Science, Natural Language Processing, Image Processing

Informed and Timely Business Decisions – A Data-driven Approach

One of the main characteristics of business rules (BRs) is their propensity for frequent change, due to factors internal or external to an enterprise. As these rules change, disseminating them immediately across the people and systems of an enterprise becomes vital. Delayed dissemination can damage the enterprise’s reputation and cause significant loss of revenue. Current business rule management systems (BRMS) are often maintained by a company’s IT group, so changes to BRs requested by executive management are not instantaneous: they must be coded and tested before being deployed. Moreover, executives may not be able to make the best decisions without the ability to analyze historical data and quickly simulate what-if scenarios that show the effects of a set of rules on the business. Some of the systems that provide this functionality are prohibitively expensive. This project addresses these challenges by using Big Data analysis to source, clean, and analyze the historical data used to mine business rules, which can then be visualized, tested on what-if scenarios, and deployed immediately without the intervention of the IT group. The proposed approach uses open-source components to mine stop-loss rules for financial systems.
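The what-if step can be illustrated with a much simpler rule than anything mined from Big Data. The sketch below assumes a fixed-percentage stop-loss on a single long position opened at the first price and replays candidate thresholds over a price history. The function names and the price series are hypothetical, and how the project actually mines its rules is not shown here.

```python
from typing import Dict, List, Optional


def stop_loss_exit(prices: List[float], stop_pct: float) -> Optional[int]:
    """Index at which a fixed stop-loss closes a long position opened at prices[0],
    or None if the price never falls to the stop level."""
    stop_level = prices[0] * (1 - stop_pct)
    for i in range(1, len(prices)):
        if prices[i] <= stop_level:
            return i
    return None


def what_if(prices: List[float], stop_pcts: List[float]) -> Dict[float, float]:
    """Return on the position for each candidate stop-loss percentage.
    Positions whose stop never triggers are closed at the last price."""
    results = {}
    for pct in stop_pcts:
        exit_index = stop_loss_exit(prices, pct)
        exit_price = prices[-1] if exit_index is None else prices[exit_index]
        results[pct] = exit_price / prices[0] - 1
    return results


# Made-up price history, for illustration only.
history = [100.0, 98.0, 94.0, 90.0, 97.0]
for pct, ret in what_if(history, [0.05, 0.15]).items():
    print(f"stop at -{pct:.0%}: return {ret:+.1%}")
```

On this series the tighter 5% stop exits at 94 and loses more than the 15% stop, which never triggers and rides the recovery. Comparing outcomes like these across historical data is the kind of question a what-if simulation lets executives answer before a rule is deployed.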

Touristic Sites Recommendation System

Recommending touristic places to visitors is an important service of touristic websites. Most websites allow visitors to either rate a site numerically or leave a review to express their opinion. Those reviews help future visitors choose the places most attractive to them and, at the same time, help the owners of touristic places improve visitor satisfaction. The number of reviews can be overwhelming, however, discouraging prospective visitors from reading them all to draw a conclusion. Relying only on star ratings can be misleading, as they lack the context and details of the reviews. We therefore propose a semantics-based recommender system that relies on natural language processing and big data analysis of visitors’ reviews. The recommendations use keywords extracted from cleaned review data, together with sentiment analysis of the reviews and context-sensitive information such as geographic location, temporal selection, and keyword translation.
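To show how keywords and sentiment can combine where a star rating alone cannot, here is a minimal sketch: it keeps only reviews that mention a keyword the visitor cares about and ranks sites by the average sentiment of those reviews. The tiny word lexicon stands in for a real sentiment model, the reviews are made up, and the cleaning, location, time, and translation steps described above are omitted; all names are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Tiny hypothetical lexicon; a real system would use a trained sentiment model.
POSITIVE = {"amazing", "beautiful", "clean", "friendly", "great"}
NEGATIVE = {"boring", "crowded", "dirty", "expensive", "rude"}


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into words."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text: str) -> float:
    """Score in [-1, 1]: (positive - negative) / opinion words, 0 if none."""
    tokens = tokenize(text)
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    return 0.0 if pos + neg == 0 else (pos - neg) / (pos + neg)


def recommend(reviews: List[Tuple[str, str]], keywords: Set[str]) -> List[Tuple[str, float]]:
    """Rank sites by the mean sentiment of reviews that mention a user keyword."""
    scores: Dict[str, List[float]] = defaultdict(list)
    for site, text in reviews:
        if keywords & set(tokenize(text)):
            scores[site].append(sentiment(text))
    ranked = [(site, sum(s) / len(s)) for site, s in scores.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Made-up reviews, for illustration only.
reviews = [
    ("Museum", "Amazing art, friendly staff"),
    ("Museum", "Crowded but great art"),
    ("Beach", "Beautiful but crowded"),
    ("Gallery", "Boring art"),
]
print(recommend(reviews, {"art"}))  # [('Museum', 0.5), ('Gallery', -1.0)]
```

The beach drops out because none of its reviews mention art, so the ranking reflects what the visitor asked about rather than overall popularity.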

Link-Analysis

Link-Analysis is a project to extract who, what, when, where, why, and how data from Wikipedia. Beyond extracting the data and putting it into a usable form, this project raises many interesting data processing problems, such as recognizing that the sentence ‘George Washington Carver was named after George Washington’ is discussing two different people. The extracted data can be used for a wide range of purposes.

Devdas

Devdas (a Sanskrit word meaning Servant of the Gods) is intended to be an ‘Alexa’ on steroids. It is a platform of cooperating intelligent agents which can exploit current advances in computer science: deep learning, big data, vision, and even quantum computing. There are currently plans to incorporate at least 30 different agents in the platform, which leaves more than enough work to keep the students busy.

BioSimVis

BioSimVis is a vision processing program being developed to take into account how biological organisms process visual information. The biological process is far different from how computers currently address the problem. A computer tries to process an entire scene at once, while the eye focuses on one smaller region at a time, moving between regions in rapid jumps (saccades). A digital image is rectilinear, while the nerve cells at the back of the eye are arranged in a phyllotactic structure. A second part of the program detects human emotion from image data. This project will use the Woody Allen movie Hannah and Her Sisters as the training and testing dataset.
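To make the contrast between a rectilinear pixel grid and a phyllotactic layout concrete, the sketch below generates sample points with Vogel's sunflower model (point k at angle k times the golden angle and radius proportional to the square root of k) and reads an image only around one fixation point, standing in for where a saccade lands. The project does not say which phyllotactic layout it uses, so the model choice and all function names are assumptions.

```python
import math
from typing import List, Sequence, Tuple

# Golden angle, pi * (3 - sqrt(5)) radians, about 137.5 degrees.
GOLDEN_ANGLE = math.pi * (3 - math.sqrt(5))


def phyllotaxis_points(n: int, c: float = 1.0) -> List[Tuple[float, float]]:
    """Vogel's model: point k lies at angle k * GOLDEN_ANGLE and radius c * sqrt(k)."""
    points = []
    for k in range(n):
        r = c * math.sqrt(k)
        theta = k * GOLDEN_ANGLE
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


def sample(image: Sequence[Sequence[int]], points, cx: float, cy: float) -> List[int]:
    """Read the nearest pixel at each point, centered on the fixation (cx, cy).
    Points that fall outside the image are skipped."""
    height, width = len(image), len(image[0])
    values = []
    for x, y in points:
        col, row = round(cx + x), round(cy + y)
        if 0 <= row < height and 0 <= col < width:
            values.append(image[row][col])
    return values


# A 5x5 test image whose pixel value encodes its position (row * 5 + col).
image = [[row * 5 + col for col in range(5)] for row in range(5)]
receptors = phyllotaxis_points(20)
print(sample(image, receptors, 2, 2))
```

Because the radius grows as the square root of k, these points cover the disk at roughly uniform density; a layout that is denser at the center, closer to the eye's fovea, would need a different radius rule.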

Rapid Homoglyph Prediction and Detection

As technology permeates the globe, it is becoming necessary to support an increasing number of languages, and hence character sets. Many character sets contain characters that look similar or identical to characters from other character sets (known as homoglyphs). This poses a number of unique problems, such as the homoglyph URL attacks frequently used in phishing scams. Previously proposed solutions to these problems have mostly focused on restricting the characters available to users. This project proposes a new approach that can predict and detect homoglyphs with a high level of accuracy. The methodology simulates the human behavior of “visual scanning” and represents large amounts of visual data in a format that is easy and quick to search. Additionally, it employs a pre-processing time-memory trade-off to detect and predict homoglyphs in fractions of a second.
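The time-memory trade-off can be illustrated with a precomputed lookup table: similarity work is done once, up front, so detection and prediction at query time are just dictionary lookups. The sketch below swaps in a simpler technique than the project's visual scanning, mapping each character to a canonical "skeleton" in the spirit of Unicode's confusable detection (UTS #39). The small hand-picked table and all function names are hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical hand-picked confusables table (look-alike -> canonical character).
# The project derives similarity visually; this table only stands in for that step.
CONFUSABLES: Dict[str, str] = {
    "\u0430": "a",  # CYRILLIC SMALL LETTER A
    "\u0435": "e",  # CYRILLIC SMALL LETTER IE
    "\u043e": "o",  # CYRILLIC SMALL LETTER O
    "\u0440": "p",  # CYRILLIC SMALL LETTER ER
    "\u0441": "c",  # CYRILLIC SMALL LETTER ES
    "\u03bf": "o",  # GREEK SMALL LETTER OMICRON
    "0": "o",
    "1": "l",
}

# Pre-processing (the memory side of the trade-off): invert the table once so that
# prediction is a dictionary lookup instead of a search over all characters.
LOOKALIKES: Dict[str, List[str]] = {}
for glyph, base in CONFUSABLES.items():
    LOOKALIKES.setdefault(base, []).append(glyph)


def skeleton(s: str) -> str:
    """Replace every character by its canonical look-alike; O(len(s))."""
    return "".join(CONFUSABLES.get(ch, ch) for ch in s.lower())


def is_homoglyph_of(candidate: str, target: str) -> bool:
    """Detect: different strings that look the same according to the table."""
    return candidate != target and skeleton(candidate) == skeleton(target)


def predict_variants(target: str) -> Set[str]:
    """Predict: every string that differs from target by one look-alike substitution."""
    variants = set()
    for i, ch in enumerate(target):
        for glyph in LOOKALIKES.get(ch, []):
            variants.add(target[:i] + glyph + target[i + 1:])
    return variants


print(is_homoglyph_of("p\u0430ypal", "paypal"))  # True (Cyrillic a)
print(len(predict_variants("paypal")))           # 5
```

Prediction lets a defender register or block look-alike domains before an attacker uses them, while detection checks incoming URLs against a protected name.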

Improving Text Mining with Enhanced Named-Entity Recognition

We are using a state-of-the-art parser to process natural language texts such as news articles. The parser uses a named-entity recognizer (NER) to identify phrases as names of people, organizations, and locations. DBpedia is a semantically organized database that is automatically created from the structured content of Wikipedia. In accordance with the principles of the Semantic Web, DBpedia has a large ontology that further categorizes people, organizations and locations. By accessing the latest archives of DBpedia, we are experimenting with matching the named entities found in the text with those categorized by DBpedia to obtain a finer-grained categorization. For example, this technique allows us to identify people as scientists or politicians, and organizations as companies or universities. Our goal is to use this information to improve the performance of text clustering and classification methods.
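The matching step can be sketched in two parts: a SPARQL query that asks DBpedia for an entity's ontology classes, and a function that refines a coarse NER label using whichever finer class appears in the result. The coarse labels (PERSON, ORGANIZATION, LOCATION), the choice of finer classes, and the function names are assumptions for illustration; the query would be sent to a DBpedia SPARQL endpoint, which this offline sketch does not do.

```python
from typing import Dict, List, Set

DBO = "http://dbpedia.org/ontology/"

# Hypothetical choice of finer DBpedia ontology classes for each coarse NER label,
# checked in order of preference.
FINE_CLASSES: Dict[str, List[str]] = {
    "PERSON": ["Scientist", "Politician"],
    "ORGANIZATION": ["Company", "University"],
    "LOCATION": ["City", "Country"],
}


def dbpedia_type_query(entity: str) -> str:
    """Build a SPARQL query listing the DBpedia ontology classes of an entity.
    Names with special characters would also need URI escaping (not handled here)."""
    uri = "http://dbpedia.org/resource/" + entity.strip().replace(" ", "_")
    return (
        "SELECT ?type WHERE { "
        f"<{uri}> a ?type . "
        'FILTER(STRSTARTS(STR(?type), "http://dbpedia.org/ontology/")) }'
    )


def refine_label(coarse: str, type_uris: Set[str]) -> str:
    """Return the first finer class DBpedia assigns to the entity,
    or fall back to the coarse NER label when nothing matches."""
    for name in FINE_CLASSES.get(coarse, []):
        if DBO + name in type_uris:
            return name
    return coarse


print(dbpedia_type_query("Marie Curie"))
# Example input shaped like a query result (not actual DBpedia output):
print(refine_label("PERSON", {DBO + "Person", DBO + "Scientist"}))  # Scientist
```

Falling back to the coarse label keeps every entity usable as a clustering or classification feature even when DBpedia has no matching entry.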

Urban Data Analysis

Data Analytics involves the study and application of algorithms and methodologies to discover insights from data. Specifically, our research activity focuses on the analysis of urban data, aimed at predicting crime patterns and user mobility behaviors. Such predictive models can help improve the provisioning and management of city resources, anticipate criminal activity, and predict mobility patterns to avoid traffic congestion.

Information Security

Privacy Control of Social Networking

Social media is a de facto standard in online communication. When users interact with others on a large scale, privacy plays an increasingly important part in maintaining safety and user confidence in a social media platform. Allowing end-users to control their interactions and data with others is one way to inspire that confidence. Many control schemes exist, but they are flawed in two key respects: users may not understand exactly what information is being shared with others, and the controls themselves are hidden and abstract. In this project, we propose a prototype named Solendar that addresses these issues with a dedicated authorization approach. Its pass system allows end-users to explicitly specify, on an individual level, which users are able to see and interact with their information. Its group system builds on the pass system, allowing end-users to organize other users into logical segments for effective information sharing while retaining control over their privacy.
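A pass-and-group scheme like the one described can be modeled as explicit allow lists: nothing is visible by default, individual passes grant access to one user, and a group pass grants access to every member of a group. The sketch below covers viewing only and is a hypothetical model; Solendar's actual data structures are not described here, and the class and method names are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class PrivacySettings:
    """Hypothetical pass-and-group model: deny by default, allow only explicit grants."""
    passes: Dict[str, Set[str]] = field(default_factory=dict)        # item -> users holding a pass
    groups: Dict[str, Set[str]] = field(default_factory=dict)        # group -> member users
    group_passes: Dict[str, Set[str]] = field(default_factory=dict)  # item -> groups holding a pass

    def grant(self, item: str, user: str) -> None:
        """Give one user a pass to one item."""
        self.passes.setdefault(item, set()).add(user)

    def add_member(self, group: str, user: str) -> None:
        """Place a user in one of the owner's logical segments."""
        self.groups.setdefault(group, set()).add(user)

    def grant_group(self, item: str, group: str) -> None:
        """Give every current and future member of a group a pass to an item."""
        self.group_passes.setdefault(item, set()).add(group)

    def audience(self, item: str) -> Set[str]:
        """Everyone who can see the item, so the owner can check exactly what is shared."""
        users = set(self.passes.get(item, set()))
        for group in self.group_passes.get(item, set()):
            users |= self.groups.get(group, set())
        return users

    def can_view(self, item: str, user: str) -> bool:
        return user in self.audience(item)


settings = PrivacySettings()
settings.grant("vacation_photos", "bob")
settings.add_member("family", "carol")
settings.grant_group("vacation_photos", "family")
print(sorted(settings.audience("vacation_photos")))  # ['bob', 'carol']
print(settings.can_view("vacation_photos", "dave"))  # False
```

The `audience` method addresses the first flaw named above: instead of abstract settings, the owner can list exactly who will see an item before sharing it.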